5G VERIFICATION IS IMPOSSIBLE WITHOUT EMULATION

18.08.2020

The development of 5G technologies has kicked into high gear, and developers are quickly discovering the new challenges that 5G brings.

Many of the prior technologies have been pulled apart, reassembled, and reinvented.

New applications mean many more standards and use cases.

Much of the technology is being virtualized.

Data volumes will be massive, due both to new, ubiquitous applications and to new technologies like massive MIMO (mMIMO) and beamforming.

There will be multiple architectures, depending on the balance between cost and performance.

Performance requirements will be tough: much tighter latency, higher bandwidth, and higher frequencies.

The number of possible combinations of technologies and equipment configurations makes it entirely impractical to build prototypes and then test out their capabilities and resilience. Verification must be done pre-silicon, and there’s only one practical way to accomplish that: hardware emulation.

REINVENTING CELLULAR TECHNOLOGY

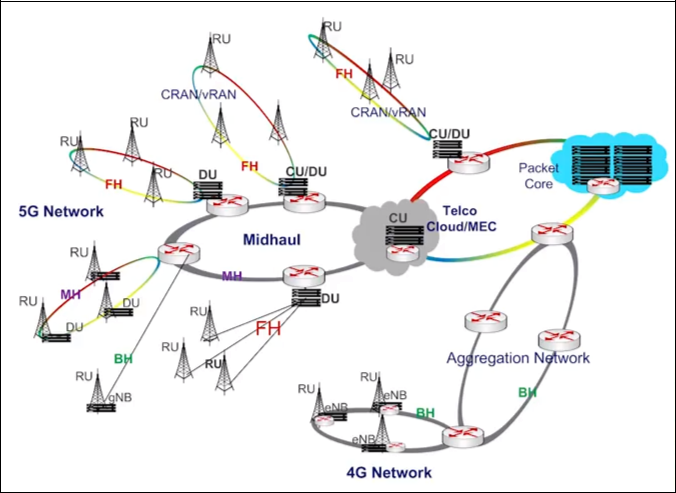

While 5G doesn’t start from a clean slate, it does make significant changes to the 4G architecture. In particular, the radio access network (RAN) has been reimagined as Cloud RAN (sometimes called Centralized RAN), or C-RAN. The backhaul has been split into two, with a centralized unit (CU) handling baseband processing before sending signals to the distributed units (DUs) in the base stations. The connection between the CU and the DUs is now called fronthaul, and, technically, backhaul now occurs between the CU and the core.

Higher-level networking layers are also being virtualized on standard computing equipment for greater flexibility and agility. Network-function virtualization (NFV) and software-defined networking (SDN) make it possible to configure and reconfigure networks as needed to make optimal use of the available radio antennas and other resources. While this adds flexibility and lowers operating costs, it also allows far more configurations than were possible with 4G and earlier generations, and every one of those configurations must be verified.
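To make the combinatorics concrete, here is a minimal Python sketch that counts and enumerates a configuration space. The axes and option values in `CONFIG_AXES` are hypothetical illustrations, not drawn from any standard or product, and a real test plan would also prune combinations that cannot occur together.

```python
from itertools import product
from math import prod

# Hypothetical configuration axes for a 5G system under test.
# Names and option lists are illustrative assumptions only.
CONFIG_AXES = {
    "ran_split": ["centralized", "distributed", "integrated"],
    "antenna_elements": [8, 32, 64, 128],
    "numerology": [0, 1, 2, 3],
    "vnf_placement": ["edge", "regional", "central"],
    "traffic_profile": ["mobile_broadband", "iot", "v2x", "mixed"],
}


def count_configurations(axes):
    """Size of the full Cartesian product: the product of the option counts."""
    return prod(len(options) for options in axes.values())


def enumerate_configurations(axes):
    """Yield every configuration as a dict mapping axis name to chosen option."""
    names = list(axes)
    for combo in product(*(axes[name] for name in names)):
        yield dict(zip(names, combo))


if __name__ == "__main__":
    print(count_configurations(CONFIG_AXES))  # 3 * 4 * 4 * 3 * 4 = 576
    print(next(enumerate_configurations(CONFIG_AXES)))
```

Because the count is a product, adding just one more axis with five options multiplies it by five, to 2,880, which is why building a physical prototype for each configuration quickly becomes impractical.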

Figure 1 - 5G backhaul, midhaul and fronthaul based network architecture (Source: Xilinx)

5G AS ALL THINGS FOR ALL PEOPLE

We’re used to smartphones having both voice and data capabilities, and 5G promises to crank up the amount of data our phones can handle. Technologies like mMIMO and beamforming will increase data volumes further. And rather than discarding signals that would previously have been treated as inter-cell interference, 5G leverages those multiple signals, which amount to yet more data, for the directionality and greater sensitivity they provide.

But beyond more traditional voice and data, 5G is intended to take on far more responsibility than prior generations have had. First, it will be a channel for internet-of-things (IoT) devices transporting data to and from the cloud. Commercial IoT devices are low-bandwidth applications, but in aggregate they will contribute significantly to traffic levels. Industrial IoT devices may generate far larger amounts of data.

5G will also carry automotive V2X traffic. Cars are expected to transmit huge volumes of data as they drive. Autonomous vehicles will need to communicate with everything around them to operate safely, and all vehicles, driven or driverless, are likely to send operational data to the cloud so that performance can be tracked and improved.

While this speaks partly to the expected data volumes, it also adds to the number of use cases that must be considered when proving out new equipment. To stress-test equipment using real prototypes, one would have to build hundreds of user-equipment units and tens of base stations to achieve anything remotely useful. With multiple companies building multiple versions of the many kinds of equipment needed to prototype real-world systems and test multiple use cases, that means an entire cycle of building silicon, building systems to see how they work, and then revising the silicon and building commercial products. That’s a lot of time, effort, and money lost.

Testing systems before committing to silicon provides better coverage, and it does so without the build-test-rebuild cycle. In the best case, you avoid at least one mask turn for each IC in the entire 5G infrastructure.

TESTING MUST BE THOROUGH

The challenge is not just in running a long list of tests; it’s also in pushing boundaries and stressing systems, seeing where they break. Each system will contain one or more SoCs, and each SoC must be run through a variety of realistic verification tests to ensure that, once deployed, it will be up to the many tasks it must perform.

The tests that must be performed include:

- Power: peak, average, and minimum – and compliance with a comprehensive power intent specification

- Latency: minimum and maximum

- Identification of the critical paths in the event that performance changes are needed

- Points of failure when stressed up to and beyond expected limits

- Code coverage: both HLS/RTL code for the hardware and the millions of lines of software code that will make their way into the overall systems

- Fault coverage metrics that identify whether all of the testing has been comprehensive enough

- The inclusion of testing infrastructure (design-for-test, or DFT)

- Physical verification that includes identification and modification of any yield-limiting hot spots on the chip (design-for-manufacturing, or DFM)
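Fault coverage, one of the metrics listed above, is commonly reported as the fraction of modeled faults that the test set detects. The following minimal Python sketch assumes the single stuck-at fault model; the net names and the set of detected faults are made up for illustration, and a real flow would obtain both from a fault simulator rather than a hand-written list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fault:
    """A stuck-at fault on a named net (net names here are hypothetical)."""
    net: str
    stuck_at: int  # 0 or 1


def fault_coverage(all_faults, detected):
    """Fraction of modeled faults detected by at least one test."""
    if not all_faults:
        raise ValueError("fault list is empty")
    return len(detected & all_faults) / len(all_faults)


# Every net can be stuck at 0 or stuck at 1.
nets = ["clk_en", "fifo_full", "crc_ok", "irq"]
all_faults = {Fault(n, v) for n in nets for v in (0, 1)}

# Faults that a hypothetical test suite detected.
detected = {
    Fault("clk_en", 0), Fault("clk_en", 1),
    Fault("fifo_full", 0), Fault("crc_ok", 1),
    Fault("irq", 0), Fault("irq", 1),
}

coverage = fault_coverage(all_faults, detected)
undetected = sorted(all_faults - detected, key=lambda f: (f.net, f.stuck_at))
print(f"{coverage:.1%}")  # 6 of 8 faults detected -> 75.0%
print(undetected)         # the gaps that need new or better tests
```

The undetected list is the actionable part: it points at the specific nets that no test currently exercises.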

While some of those tests can be run on live systems, others – such as critical-path identification, DFT, and DFM – can be performed only on a system that has visibility into the design itself.

ADDING AI TO THE MIX

One new element will be making appearances in a broad range of equipment, with 5G being no exception: artificial intelligence (AI) and machine learning (ML). Architects view ML as a useful tool for a number of sophisticated uses in optimizing the 5G infrastructure in real time. That includes automatic channel estimation for over-the-air (OTA) transmission; the use of self-organizing networks (SON); automated multiple-access handover; and the introduction of coordinated multi-point (CoMP) technology for improved MIMO and diversity.

Systems will be operating with trained neural-network models that are subject to being updated. But with so many options available for processing neural nets, it’s not enough to thoroughly vet the selected option; the alternatives must also be tested before the final choice is made.

Then there’s model training. The model itself will depend both on the specific examples used for training and the order in which that training occurs. And as more training examples become available, the models can be further refined. While updates can improve models in equipment that’s already deployed, the initial models must themselves be rock solid. That means testing them against an enormous number of examples to ensure that they behave as expected under a wide range of circumstances.
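The dependence on training order is easy to see even in a toy model. This minimal Python sketch runs one pass of stochastic gradient descent on a one-parameter linear model, using the same three made-up examples in two different orders. It is not a 5G model, only an illustration of why the training order has to be controlled (here with a seeded shuffle) if a trained model is to be reproduced and tested.

```python
import random


def sgd_one_pass(examples, lr=0.05, w0=0.0):
    """One SGD pass fitting y ~ w * x with loss 0.5 * (w * x - y) ** 2."""
    w = w0
    for x, y in examples:
        grad = (w * x - y) * x  # derivative of the loss with respect to w
        w -= lr * grad
    return w


# Hypothetical toy data: the same three examples in two different orders.
examples = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5)]
w_forward = sgd_one_pass(examples)
w_reversed = sgd_one_pass(list(reversed(examples)))

# A seeded shuffle makes a chosen training order reproducible.
order_a = examples[:]
random.Random(7).shuffle(order_a)
order_b = examples[:]
random.Random(7).shuffle(order_b)
w_shuffled_a = sgd_one_pass(order_a)
w_shuffled_b = sgd_one_pass(order_b)

print(f"forward:  {w_forward:.4f}")   # 1.1165
print(f"reversed: {w_reversed:.4f}")  # 1.1545
print(w_shuffled_a == w_shuffled_b)   # True: same seed, same order, same model
```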

LEVERAGING EMULATION BEFORE SILICON IS BUILT

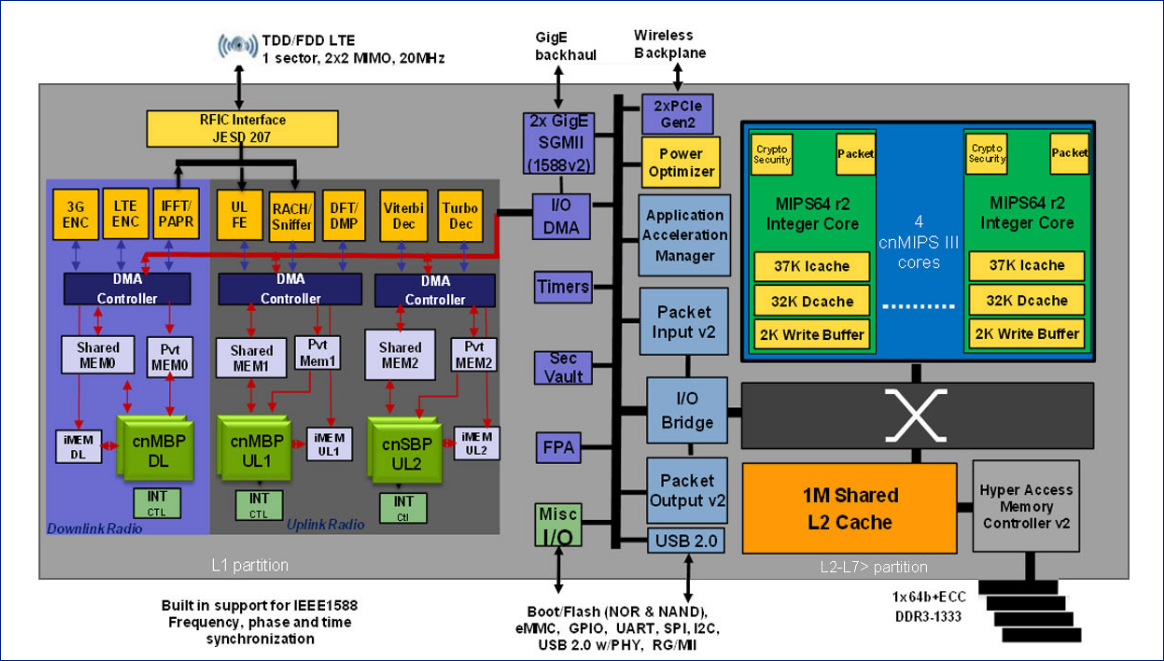

A 5G SoC will be an extremely complex system with many moving parts. Figure 2 shows one example baseband processor, though it is only one of many possible architectures. The communications, computing, and memory requirements are substantial, and many blocks must work together seamlessly for the SoC to perform its duties effectively.

Given that the full range of performance of 5G components must be tested pre-silicon, what options are there? In reality, there’s only one: emulation. The obvious alternative, simulation, runs far too slowly to achieve anything useful with such a diverse and exacting range of tests. Emulation runs on the order of 1,000 times faster than simulation, making it possible to test real-world scenarios involving both hardware and software.
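A back-of-the-envelope calculation shows what a 1,000x speed difference means in practice. The effective clock rates (`SIM_HZ`, `EMU_HZ`) and the workload size below are hypothetical round numbers chosen only to reproduce that ratio; real throughput depends heavily on design size, platform, and how much software is being run.

```python
SECONDS_PER_HOUR = 3_600
SECONDS_PER_DAY = 86_400

# Hypothetical effective speeds, picked only to match the ~1,000x ratio.
SIM_HZ = 1_000          # simulated design cycles per wall-clock second
EMU_HZ = 1_000_000      # emulated design cycles per wall-clock second

# Hypothetical workload: e.g. booting software plus running a traffic test.
WORKLOAD_CYCLES = 100_000_000_000  # 1e11 design clock cycles


def wall_clock_seconds(cycles, cycles_per_second):
    """Time needed to execute a given number of design cycles."""
    return cycles / cycles_per_second


sim_s = wall_clock_seconds(WORKLOAD_CYCLES, SIM_HZ)
emu_s = wall_clock_seconds(WORKLOAD_CYCLES, EMU_HZ)

print(f"simulation: {sim_s / SECONDS_PER_DAY:,.0f} days")    # 1,157 days
print(f"emulation:  {emu_s / SECONDS_PER_HOUR:,.1f} hours")  # 27.8 hours
print(f"speedup:    {sim_s / emu_s:,.0f}x")                  # 1,000x
```

Under these assumptions, the same test takes about three years in simulation but roughly a day in emulation, which is the difference between a test that never runs and one that runs nightly.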

Figure 2 - The OCTEON™ Fusion® CNF7100 baseband processor (Source: Marvell)

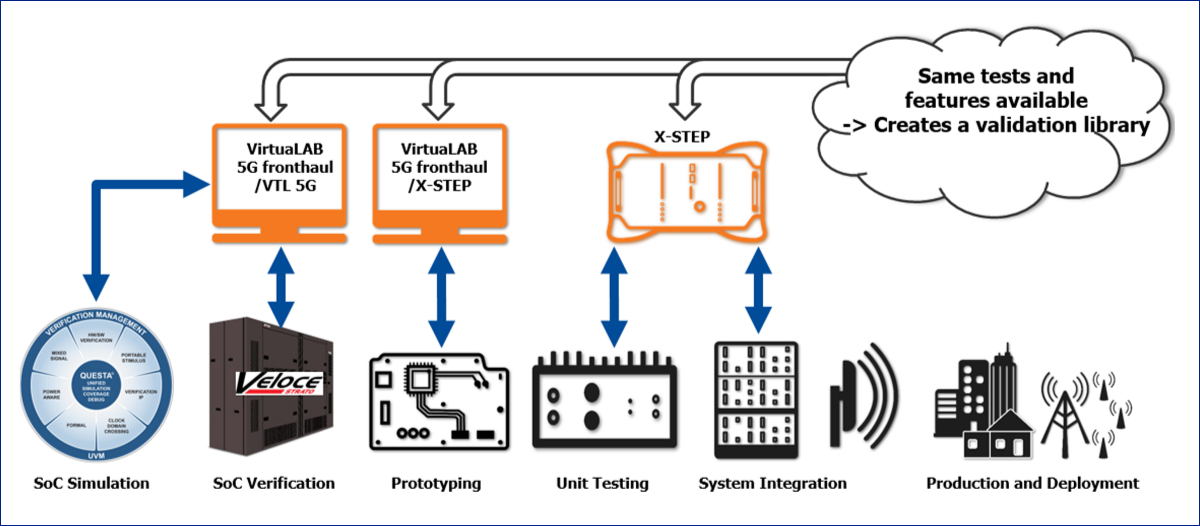

Using real equipment to generate the data streams used for verification might seem like the logical way to go, but this approach has three main weaknesses. First, the data rate coming from the cable doesn’t naturally match the rate at which the emulator runs, so rate adapters are required. Second, these connections and rate adapters must be cabled manually, which rules out a centralized, data-center emulation model.

Finally, and most importantly, that traffic input is not predictable – and it’s not repeatable. If an error occurs, it’s difficult to go back and replay the event when trying to debug the problem. It’s far better to have a data source that’s deterministic, reproducible, and scalable – meaning that connections can be made to scalable units in a data center without manual intervention.
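The difference between a live feed and a deterministic source can be sketched in a few lines. In this minimal Python example, a seeded pseudo-random generator stands in for a virtual traffic source; the frame-length range and the seed are arbitrary assumptions, and this is not how VirtuaLAB or any other verification IP is implemented. Rerunning with the same seed regenerates exactly the same frames, so a failure seen at frame N can be replayed as often as needed.

```python
import random
import zlib


def generate_frames(seed, count, min_len=64, max_len=1500):
    """Yield (index, payload) pairs from a private, seeded PRNG.

    Using random.Random(seed) rather than the module-level functions keeps the
    stream independent of any other code that consumes random numbers.
    """
    rng = random.Random(seed)
    for index in range(count):
        length = rng.randint(min_len, max_len)
        payload = bytes(rng.getrandbits(8) for _ in range(length))
        yield index, payload


def stream_digest(seed, count):
    """CRC-32 over the whole stream: a cheap check that two runs saw identical traffic."""
    crc = 0
    for _, payload in generate_frames(seed, count):
        crc = zlib.crc32(payload, crc)
    return crc


if __name__ == "__main__":
    first_run = list(generate_frames(seed=2020, count=100))
    replay = list(generate_frames(seed=2020, count=100))
    print(first_run == replay)  # True: same seed, bit-identical traffic
    print(hex(stream_digest(2020, 100)))
```

One caveat on the design choice: Python's `random` module only guarantees that `random()` repeats for a given seed across versions, so a stream built on `randint` should be regenerated with the same Python version, or recorded once, if it must match bit for bit over the long term.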

These are key traits of an emulation system when accompanied by virtualized protocol test modules, such as the VirtuaLAB units and other comprehensive pieces of verification IP that can be instantiated within or connected to an emulator. Each protocol used to connect 5G system components can be modeled and virtualized to drive and receive data from the design-under-test (DUT). And they can be instantiated remotely in a data center.

Figure 3 - 5G fronthaul development flow (Source: Mentor, a Siemens Business)

Emulation is also more accurate because all pieces of the test system are clock-aligned. If a failure occurs, we can track exactly which cycle it occurred on and correlate that with the exact input data and system state in place when the error happened. This takes an enormous amount of guesswork out of the debugging process, streamlining efforts to correct design flaws and deliver a fully verified design for mask generation.
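Because every component shares one aligned clock, a failure can be located by cycle and tied back to the inputs that preceded it. This minimal Python sketch assumes a cycle-stamped trace and a hypothetical golden reference model (here, simply inverting the low 8 bits); the trace format and `reference_model` are illustrative assumptions, not any emulator's actual debug interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sample:
    cycle: int     # clock cycle on the shared, aligned timebase
    stimulus: int  # input word driven into the DUT on this cycle
    output: int    # output word observed from the DUT on this cycle


def reference_model(stimulus: int) -> int:
    """Hypothetical golden model: the DUT should invert the low 8 bits."""
    return stimulus ^ 0xFF


def first_failure(trace: List[Sample]) -> Optional[Sample]:
    """Return the earliest sample whose output disagrees with the reference."""
    for s in sorted(trace, key=lambda s: s.cycle):
        if s.output != reference_model(s.stimulus):
            return s
    return None


def stimulus_window(trace: List[Sample], cycle: int, depth: int = 4) -> List[int]:
    """Inputs driven on the `depth` cycles up to and including `cycle`."""
    return [s.stimulus for s in trace if cycle - depth < s.cycle <= cycle]


# Build a clean trace, then inject a single bad output at cycle 617.
trace = [Sample(c, c & 0xFF, (c & 0xFF) ^ 0xFF) for c in range(1000)]
trace[617] = Sample(617, 617 & 0xFF, 0x00)

bad = first_failure(trace)
print(bad)  # Sample(cycle=617, stimulus=105, output=0)
print(stimulus_window(trace, bad.cycle))
```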

Finally, huge portions of the test plan and results used for pre-silicon verification can be used directly for post-silicon verification. That eliminates an enormous amount of test generation work, and it makes debugging far easier in the event that any issues arise in the silicon check-out. By replacing the virtual DUT with a physical silicon chip, all of the infrastructure brought to the verification process can be re-used to confirm that the silicon does indeed work as expected. That includes the testing of the wide range of configurations and use cases needed for pre-silicon verification.
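The reuse argument rests on writing tests against an interface rather than against a specific platform. This minimal Python sketch assumes a hypothetical `DutPort` interface; the backends are loopback stubs standing in for an emulated design and a lab board (real ones would wrap the emulator's transactors or the bench drivers, neither of which is shown), plus one stub with an injected defect.

```python
from abc import ABC, abstractmethod
from collections import deque


class DutPort(ABC):
    """Everything a test needs from the device under test, whatever it is."""

    @abstractmethod
    def send(self, frame: bytes) -> None: ...

    @abstractmethod
    def receive(self) -> bytes: ...


class _LoopbackStub(DutPort):
    """Shared loopback behavior for the hypothetical stubs below."""

    def __init__(self):
        self._rx = deque()

    def send(self, frame: bytes) -> None:
        self._rx.append(frame)

    def receive(self) -> bytes:
        return self._rx.popleft()


class EmulatedDut(_LoopbackStub):
    """Stub for a design running in an emulator."""


class SiliconDut(_LoopbackStub):
    """Stub for a physical chip on a lab board."""


class FaultyDut(_LoopbackStub):
    """Stub with an injected defect: corrupts frames that start with 0x05."""

    def send(self, frame: bytes) -> None:
        if frame[:1] == b"\x05":
            frame = b"\xff" + frame[1:]
        super().send(frame)


def echo_test(dut: DutPort, frames) -> int:
    """Same test body for every backend: count frames not echoed back intact."""
    failures = 0
    for frame in frames:
        dut.send(frame)
        if dut.receive() != frame:
            failures += 1
    return failures


frames = [bytes([i]) * 64 for i in range(16)]
for backend in (EmulatedDut(), SiliconDut(), FaultyDut()):
    print(type(backend).__name__, echo_test(backend, frames))
```

The point of the design is that `echo_test` never checks which backend it was given, so swapping the emulated DUT for silicon changes one constructor call, not the test plan.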

5G DEMANDS EMULATION

5G’s very strength (flexibility, configurability, and a broad range of uses) makes verifying 5G SoCs extremely difficult. High complexity and diverse use cases present a huge challenge for teams trying to get their 5G silicon to market quickly and with highly competitive features and performance. The old ways of verifying and stressing silicon after a first round of chips has been built are no longer viable. There are too many options to consider; there are too many configurations and architectures that must be proven out.

Emulation, combined with a rich assortment of virtualized versions of the many protocols that 5G will require, is the only practical way of ensuring that the first round of silicon built will be the production version, able to handle all of the functions and configurations that it might be faced with and having the tight performance characteristics needed for successful integration into a 5G system.

These SoCs will be large and complex. But with capacities as high as 15 billion gates, emulators can handle the largest 5G designs, along with the accompanying virtualized components needed for a thorough check-out of any design.

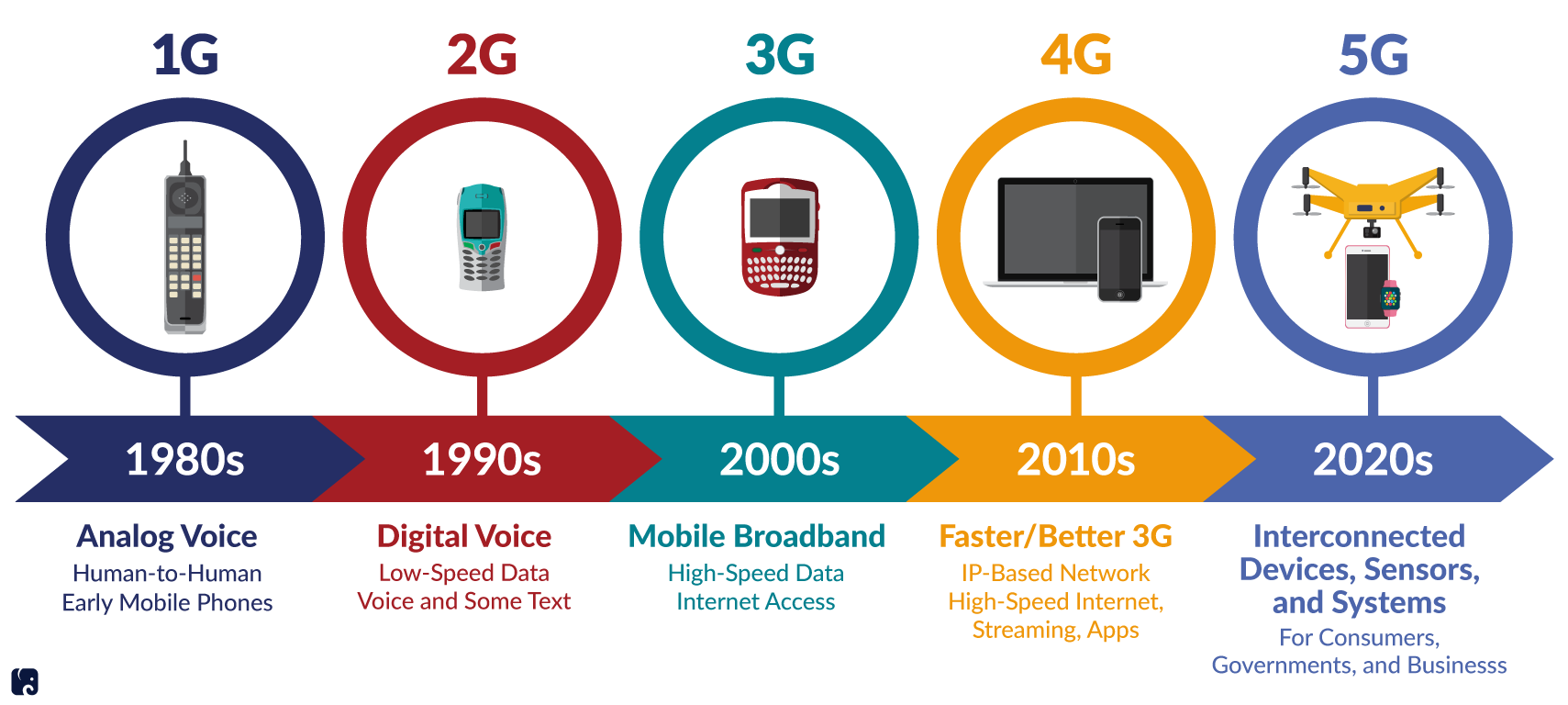

Figure 4 - 5G evolution (Source: RPC)
